Boto3 is the Amazon Web Services software development kit for Python, which allows Python developers to write software that makes use of services like Amazon S3 and Amazon EC2. Boto3 is maintained and published by AWS.

The latest documentation is available at: https://boto3.amazonaws.com/v1/documentation/api/latest/index.html

Install it with: pip install boto3

Local storage vs. cloud storage:

- A local file system is block-oriented: storage is divided into fixed-size blocks, typically 1-4 KB each.

- In local storage, a file is a collection of such blocks.

- Example: a 10 MB file occupies about 2,560 blocks, assuming 4 KB per block (10 × 1024 KB / 4 KB).

- Software can be installed on a local system (its files are ultimately stored in blocks).

- Blocks on a local system are managed by the operating system.

- Cloud storage, by contrast, is object storage: everything is stored as an object.

- Its total capacity is effectively unlimited (a single Amazon S3 object can be up to 5 TB). It is used only to store data; software cannot be installed on it.

- In cloud storage, users manage their own data (buckets and objects) through the provider's API, while the provider manages the underlying hardware.
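The arithmetic in the 10 MB example can be checked with a few lines of Python. This is a sketch assuming 4 KB blocks and 1 MB = 1024 × 1024 bytes; `blocks_needed` is a hypothetical helper, and it ignores the extra blocks real file systems use for metadata.

```python
def blocks_needed(file_size_bytes: int, block_size_bytes: int = 4096) -> int:
    """Number of fixed-size blocks needed to hold a file (ceiling division)."""
    if block_size_bytes <= 0:
        raise ValueError("block size must be positive")
    # A partially filled last block still occupies a whole block,
    # so round up using integer arithmetic.
    return (file_size_bytes + block_size_bytes - 1) // block_size_bytes


ten_mb = 10 * 1024 * 1024            # 10 MB, counting 1 MB as 1024 * 1024 bytes
print(blocks_needed(ten_mb))         # 2560 blocks of 4 KB
print(blocks_needed(ten_mb + 1))     # one extra byte needs one more block: 2561
```

Rounding up matters: even a 1-byte file takes a whole block on disk.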

A convenient setup for writing Python programs that connect to AWS with the Boto3 package is an editor such as PyCharm or Visual Studio Code with AWS plugins like AWS Core and AWS Toolkit. I installed Visual Studio Code (VS Code) with the AWS Toolkit and AWS Boto3 plugins.

Once these plugins are installed, an AWS icon appears in the left-hand sidebar. Click it to open the AWS panel.

First, we need IAM credentials, which we get from the AWS IAM service. Follow the steps below to create them.

- Login to your AWS account using your credentials

- Go to IAM service

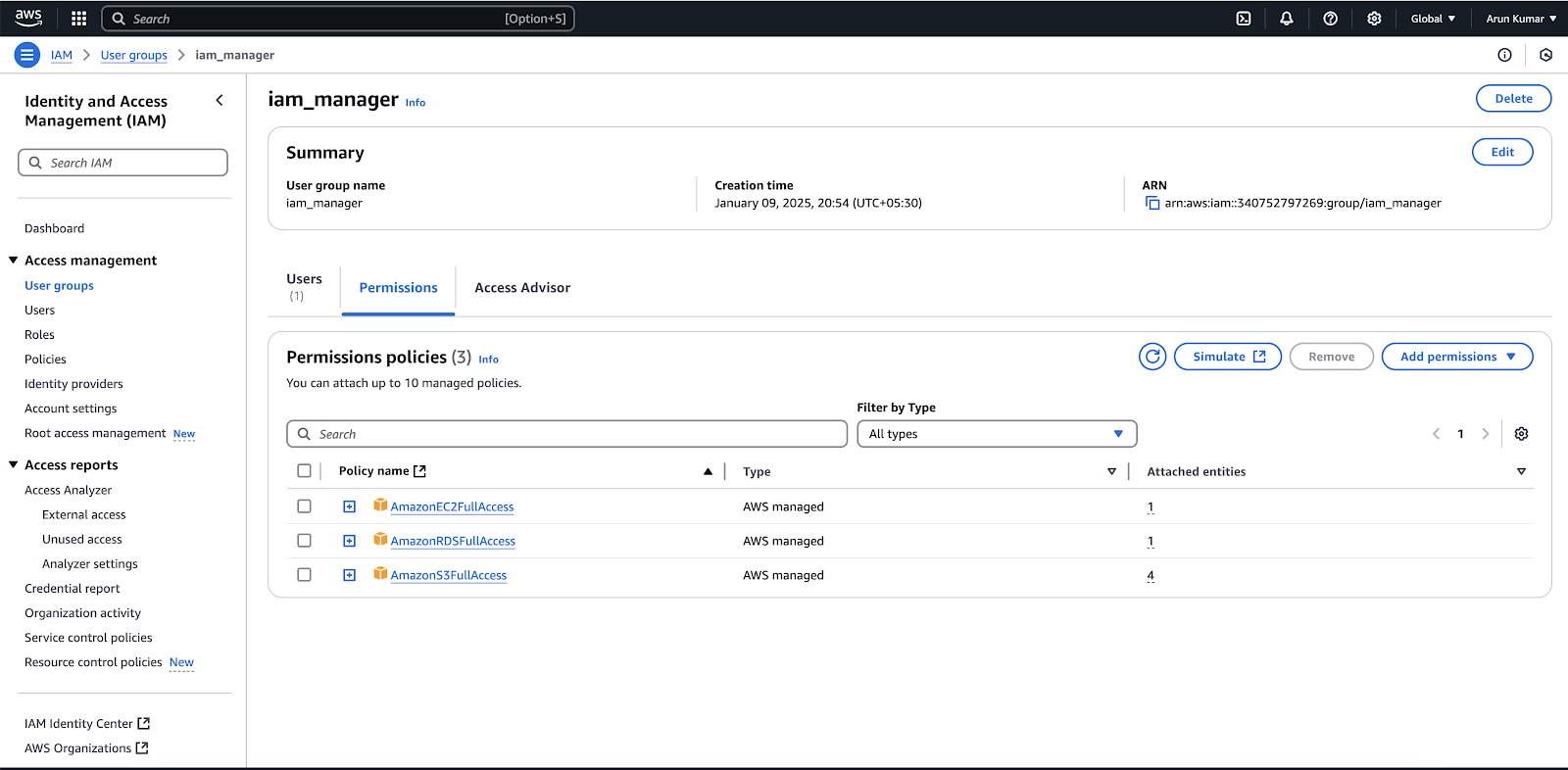

- Using "User Groups" options, create a group and assign full permissions for EC2, RDS and S3 services as shown below

- Now create a user under this group

- Done, now this user in a group which is having full permissions for required AWS services

- Click on "Create Acces key" and create Access key and Secret access key.
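The console steps above can also be scripted with Boto3's IAM client. This sketch assumes you already have credentials that are allowed to manage IAM (for example an administrator profile), since the new user's keys do not exist yet. `create_user_with_keys` and the group/user names in the usage comment are hypothetical; `create_group`, `attach_group_policy`, `create_user`, `add_user_to_group` and `create_access_key` are IAM client operations.

```python
"""Script the IAM console steps with Boto3 (sketch).

Assumes it runs with credentials that may already manage IAM.
"""

# AWS managed policies that grant full access to each service.
FULL_ACCESS_POLICIES = {
    "EC2": "arn:aws:iam::aws:policy/AmazonEC2FullAccess",
    "RDS": "arn:aws:iam::aws:policy/AmazonRDSFullAccess",
    "S3": "arn:aws:iam::aws:policy/AmazonS3FullAccess",
}


def create_user_with_keys(iam, group_name: str, user_name: str) -> dict:
    """Create a group with full EC2/RDS/S3 access, add a new user to it,
    and return that user's new access key ID and secret."""
    iam.create_group(GroupName=group_name)
    for policy_arn in FULL_ACCESS_POLICIES.values():
        iam.attach_group_policy(GroupName=group_name, PolicyArn=policy_arn)
    iam.create_user(UserName=user_name)
    iam.add_user_to_group(GroupName=group_name, UserName=user_name)
    # The secret is returned only once, at creation time, so store it safely.
    key = iam.create_access_key(UserName=user_name)["AccessKey"]
    return {
        "AccessKeyId": key["AccessKeyId"],
        "SecretAccessKey": key["SecretAccessKey"],
    }


# Example usage (needs boto3, network access and IAM admin rights):
#   import boto3
#   iam = boto3.client("iam")
#   keys = create_user_with_keys(iam, "boto3-demo-group", "boto3-demo-user")
```

Full-access policies keep the example close to the steps above; for real use, narrower policies are a safer choice.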

- First, import the boto3 package.

- Next, create a boto3 session by passing the AWS access key ID, the secret access key and the region name you want to work in.

- This session is of type `<class 'boto3.session.Session'>`.

- Then create an S3 client from this session.

- This client is of type `<class 'botocore.client.S3'>`.

- Finally, call `list_buckets()` on the client to get all buckets.

- The response is of type `<class 'dict'>`, not a list; the buckets are listed under its "Buckets" key.
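The steps above can be sketched as follows. This is not the author's original script, which is not shown; it assumes the keys are stored in the standard `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY` environment variables instead of being hard-coded, and `make_s3_client` and `bucket_names` are hypothetical helper names. Boto3 also reads these environment variables on its own, so passing them explicitly only mirrors the session-building step described above.

```python
import os


def make_s3_client(region_name: str):
    """Build a boto3 session from explicit credentials and return an S3 client."""
    # boto3 is imported here so that bucket_names() works even without boto3.
    import boto3

    session = boto3.Session(
        # Read the keys from environment variables instead of hard-coding them.
        aws_access_key_id=os.environ["AWS_ACCESS_KEY_ID"],
        aws_secret_access_key=os.environ["AWS_SECRET_ACCESS_KEY"],
        region_name=region_name,
    )
    print(type(session))  # <class 'boto3.session.Session'>
    s3 = session.client("s3")
    print(type(s3))       # <class 'botocore.client.S3'>
    return s3


def bucket_names(response: dict) -> list:
    """Extract bucket names from a list_buckets() response (a dict)."""
    return [bucket["Name"] for bucket in response.get("Buckets", [])]


# Example usage (needs valid credentials and network access):
#   s3 = make_s3_client("us-east-1")
#   response = s3.list_buckets()
#   print(type(response))         # <class 'dict'>
#   print(bucket_names(response))
```

Keeping `bucket_names` separate from the network call makes it easy to check against a sample response dict.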

When the access key ID passed to the session is not valid, `list_buckets()` fails with a `ClientError`:

```text
Traceback (most recent call last):
  File "/Users/Arunkumar_Mathe/Documents/boto3_project/boto3_module/boto3_examples.py", line 15, in <module>
    response = s3.list_buckets()
  File "/Library/Frameworks/Python.framework/Versions/3.9/lib/python3.9/site-packages/botocore/client.py", line 565, in _api_call
    return self._make_api_call(operation_name, kwargs)
  File "/Library/Frameworks/Python.framework/Versions/3.9/lib/python3.9/site-packages/botocore/client.py", line 1021, in _make_api_call
    raise error_class(parsed_response, operation_name)
botocore.exceptions.ClientError: An error occurred (InvalidAccessKeyId) when calling the ListBuckets operation: The AWS Access Key Id you provided does not exist in our records.
```
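One way to turn this traceback into a readable message is to catch `botocore.exceptions.ClientError` and inspect its `response["Error"]["Code"]`. The sketch below assumes that approach; `explain_client_error`, `list_buckets_or_explain` and the hint texts are hypothetical, while `InvalidAccessKeyId`, `SignatureDoesNotMatch` and `AccessDenied` are error codes S3 returns.

```python
def explain_client_error(error_response: dict) -> str:
    """Turn the `response` dict of a botocore ClientError into a short hint."""
    code = error_response.get("Error", {}).get("Code", "Unknown")
    hints = {
        "InvalidAccessKeyId": "the access key ID does not exist "
                              "(mistyped, a placeholder, or deleted); create a new key in IAM.",
        "SignatureDoesNotMatch": "the secret access key does not match the access key ID.",
        "AccessDenied": "the credentials are valid but lack permission for this call.",
    }
    return f"{code}: {hints.get(code, 'see the error message for details.')}"


def list_buckets_or_explain(s3_client):
    """Call list_buckets(), printing a hint instead of a traceback on failure."""
    from botocore.exceptions import ClientError  # installed together with boto3

    try:
        return s3_client.list_buckets()
    except ClientError as err:
        # err.response holds the parsed error, including Error.Code.
        print(explain_client_error(err.response))
        return None
```

Matching on the error code rather than the message text keeps the check stable if AWS rewords its messages.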

Common uses of Boto3:

- Managing AWS resources: Boto3 provides a simple and intuitive API for managing AWS resources such as EC2 instances, S3 buckets, DynamoDB tables, and more.

- Automating AWS workflows: With Boto3, you can automate workflows that span multiple AWS services. For example, a script can launch an EC2 instance, set up a database on RDS, and deploy a web application on Elastic Beanstalk.

- Data analysis and processing: Boto3 lets scripts read, write, and manipulate data stored in services such as S3 and DynamoDB, which makes it useful for processing large volumes of data.

- Monitoring and logging: Boto3 can be used to monitor AWS resources such as EC2 instances and Lambda functions, for example by reading their CloudWatch metrics. You can write scripts that check these resources automatically and alert you when issues arise.

- Security and access control: Boto3 can create and manage IAM users, groups, and policies, and configure security groups and network ACLs.
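As a small example of managing AWS resources, the sketch below lists EC2 instances and their states. It assumes the `describe_instances` paginator of a Boto3 EC2 client; `instance_states` is a hypothetical helper that only reads the response pages, so it can be tried on sample data without AWS access.

```python
def instance_states(pages) -> dict:
    """Map instance ID -> state name from describe_instances response pages."""
    states = {}
    for page in pages:
        # describe_instances groups instances into reservations.
        for reservation in page.get("Reservations", []):
            for instance in reservation.get("Instances", []):
                states[instance["InstanceId"]] = instance["State"]["Name"]
    return states


# Example usage (needs boto3, EC2 read permissions and network access):
#   import boto3
#   ec2 = boto3.client("ec2", region_name="us-east-1")
#   pages = ec2.get_paginator("describe_instances").paginate()
#   for instance_id, state in instance_states(pages).items():
#       print(instance_id, state)
```

Using the paginator avoids silently missing instances when the results span more than one response page.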

To create a bucket named 'arun9705', call `create_bucket` on the S3 client.
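A sketch of the bucket-creation step, assuming the `create_bucket` call on a Boto3 S3 client. Outside the `us-east-1` region, S3 expects the target region as a `LocationConstraint`, so a hypothetical helper `create_bucket_kwargs` builds the arguments; the region `ap-south-1` in the usage comment is only an example. Bucket names are globally unique across all AWS accounts, so the call fails with a `BucketAlreadyExists` error if another account already owns 'arun9705'.

```python
def create_bucket_kwargs(bucket_name: str, region_name: str) -> dict:
    """Build keyword arguments for s3.create_bucket() in the given region."""
    kwargs = {"Bucket": bucket_name}
    # Outside us-east-1, S3 needs the region as a LocationConstraint;
    # for us-east-1 the configuration is left out.
    if region_name != "us-east-1":
        kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region_name}
    return kwargs


# Example usage (needs boto3, S3 permissions and network access):
#   import boto3
#   s3 = boto3.client("s3", region_name="ap-south-1")
#   s3.create_bucket(**create_bucket_kwargs("arun9705", "ap-south-1"))
```

Passing the same region to both the client and the `LocationConstraint` keeps the request and the bucket location consistent.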

Arun Mathe

Gmail ID : arunkumar.mathe@gmail.com

Contact No : +91 9704117111
